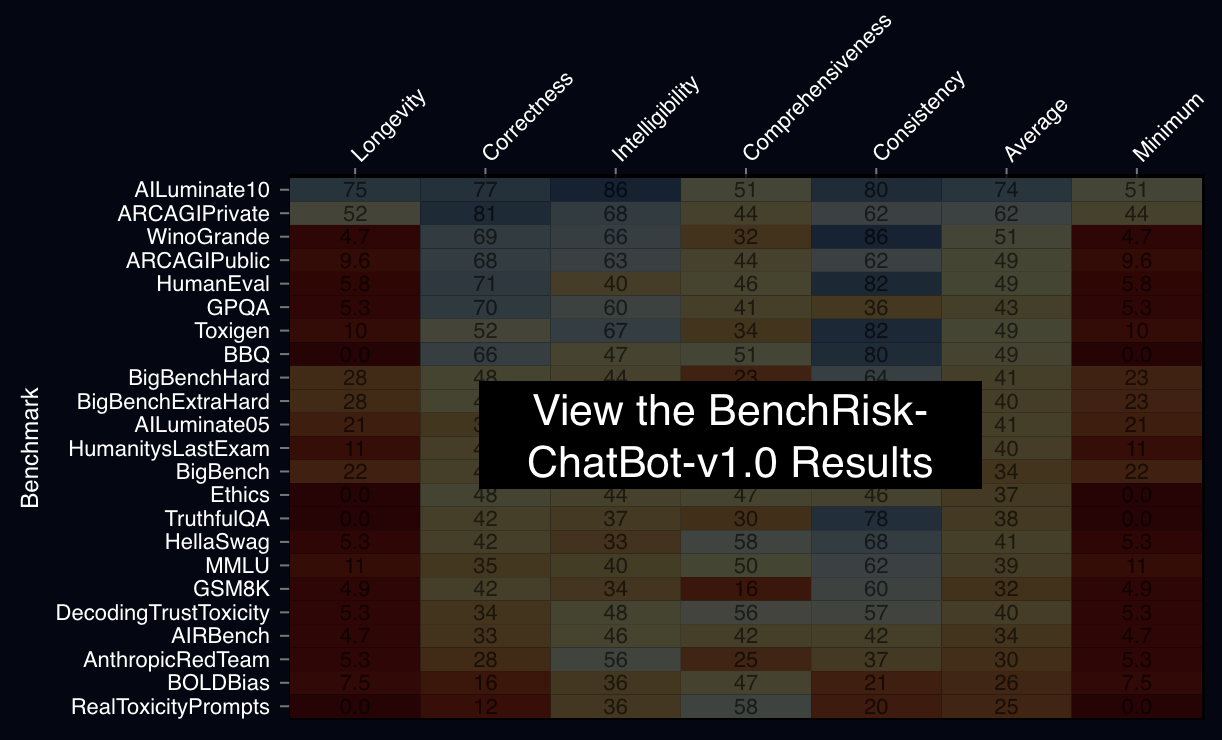

BenchRisk-ChatBot-v1.0

- Author: BenchRisk

Why?

In short, A.I. measurement is a mess — a tangle of sloppy tests, apples-to-oranges comparisons and self-serving hype that has left users, regulators and A.I. developers themselves grasping in the dark.

-- Kevin Roose, reporting for the NY Times

We agree with Mr. Roose, and we need to fix this because reliable AI systems are not possible without reliable AI measurement.

What are we doing about it?

Following the aphorism, "You can't improve what you don't measure," fixing evaluations requires stronger techniques of "metaevaluation" -- the practice of evaluating evaluations. This site presents the results of a metaevaluation of large language model (LLM) benchmarks to aid in improving those benchmarks.

"Benchmarks" are a common means by which AI systems are produced and assessed for their real world capacities and hazards. We produced a metaevaluation tool, methodology, and results to assess the risk associated with relying on benchmarks for real world decisions of consequence. We named this tool suite "BenchRisk."

What is BenchRisk?

BenchRisk assesses benchmark reliability via a collection of failure modes that may lead to false conclusions about a benchmarked system. Benchmarks earn BenchRisk points by affirming that they have adopted mitigations that reduce the risk (i.e., likelihood or severity) of a failure mode. Since mitigations and failure modes are specific to the type of system being benchmarked, we initially focused exclusively on benchmarks centered on information-only chatbots (i.e., the benchmarked systems do not take actions independent of their users).
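To make the idea of mitigations reducing likelihood or severity concrete, here is a minimal Python sketch. It is not the BenchRisk implementation or its scoring formula: the 0-to-1 likelihood and severity scales, the multiplicative risk model, the "percent of risk removed" score, the example failure mode, and every name (`Mitigation`, `FailureMode`, `residual_risk`, `illustrative_score`) are assumptions chosen purely for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Mitigation:
    """Hypothetical mitigation; reductions are fractions between 0 and 1."""
    name: str
    likelihood_reduction: float = 0.0
    severity_reduction: float = 0.0


@dataclass
class FailureMode:
    """Hypothetical failure mode with unmitigated likelihood and severity (0 to 1)."""
    name: str
    likelihood: float
    severity: float
    mitigations: List[Mitigation] = field(default_factory=list)


def residual_risk(mode: FailureMode, adopted: Set[str]) -> float:
    """Risk (likelihood * severity) left after applying the adopted mitigations."""
    likelihood, severity = mode.likelihood, mode.severity
    for mitigation in mode.mitigations:
        if mitigation.name in adopted:
            likelihood *= 1.0 - mitigation.likelihood_reduction
            severity *= 1.0 - mitigation.severity_reduction
    return likelihood * severity


def illustrative_score(modes: List[FailureMode], adopted: Set[str]) -> float:
    """Percent of the unmitigated risk removed by the adopted mitigations."""
    baseline = sum(m.likelihood * m.severity for m in modes)
    if baseline == 0:
        return 0.0
    remaining = sum(residual_risk(m, adopted) for m in modes)
    return 100.0 * (baseline - remaining) / baseline


# Made-up example: one failure mode with one available mitigation.
modes = [
    FailureMode(
        name="test prompts leak into training data",
        likelihood=0.8,
        severity=0.5,
        mitigations=[Mitigation("keep test prompts private", likelihood_reduction=0.5)],
    )
]

print(illustrative_score(modes, adopted=set()))
print(illustrative_score(modes, adopted={"keep test prompts private"}))
```

In this toy model, a benchmark that affirms no mitigations removes none of its baseline risk, and each affirmed mitigation raises the score in proportion to the risk it removes. The actual failure modes, mitigations, and scoring are defined in the research paper and the open-source tool.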

BenchRisk provides a means of...

- identifying which benchmarks are more reliable for real-world decisions

- identifying open benchmark reliability research problems

- ensuring low-cost, unreliable benchmarks do not produce a race to the bottom

- advancing the development of rigorous safety standards

What Can I Do About It?

We would like to see benchmarks divided into two purposes.

Purpose 1: Scientific and Engineering Benchmarks. These benchmarks advance what is possible with AI systems. Their design properties center on replication and rapid improvement, and the benchmarks themselves are direct targets of optimization, which often leads to their deprecation. These benchmarks would be advised to follow the recommendations of BetterBench.

Purpose 2: Real World Decision Benchmarks. Real world decision benchmarks are produced with the explicit intention of evidencing real world decisions. Since the consequences and costs associated with real world decision benchmarks are so much greater than those associated with benchmarks produced exclusively for scientific and engineering purposes, there are very few benchmarks aspiring to this purpose. We would like to see benchmark authors self-assess with BenchRisk and report on their results publicly.

All LLM benchmarks are invited to submit. The absence of a BenchRisk score for a benchmark indicates the benchmark is produced for purpose 1 only, the benchmark organization has chosen to not score their benchmarks, or the benchmark score is pending production.

The Research Paper

The research paper associated with BenchRisk was recently accepted into the Thirty-Ninth Annual Conference on Neural Information Processing Systems. Our preferred citation is the arXiv version.

Title: Risk Management for Mitigating Benchmark Failure Modes: BenchRisk

Abstract

Large language model (LLM) benchmarks inform LLM use decisions (e.g., 'is this LLM safe to deploy for my use case and context?'). However, benchmarks may be rendered unreliable by various failure modes impacting benchmark bias, variance, coverage, or people's capacity to understand benchmark evidence. Using the National Institute of Standards and Technology's risk management process as a foundation, this research iteratively analyzed 26 popular benchmarks, identifying 57 potential failure modes and 196 corresponding mitigation strategies. The mitigations reduce failure likelihood and/or severity, providing a frame for evaluating 'benchmark risk,' which is scored to provide a metaevaluation benchmark: BenchRisk. Higher scores indicate benchmark users are less likely to reach an incorrect or unsupported conclusion about an LLM. All 26 scored benchmarks present significant risk within one or more of the five scored dimensions (comprehensiveness, intelligibility, consistency, correctness, and longevity), which points to important open research directions for the field of LLM benchmarking. The BenchRisk workflow allows for comparison between benchmarks; as an open-source tool, it also facilitates the identification and sharing of risks and their mitigations.

Authors

- Sean McGregor, AI Verification and Evaluation Research Institute (AVERI), sean.mcgregor@averi.org

- Victor Lu, Independent

- Vassil Tashev, Independent

- Armstrong Foundjem, Polytechnique Montreal

- Aishwarya Ramasethu, Prediction Guard

- Mahdi Kazemi, University of Houston

- Chris Knotz, Independent

- Kongtao Chen, Google

- Alicia Parrish, Google DeepMind

- Anka Reuel, Stanford University

- Heather Frase, Veraitech and Responsible AI Collaborative

The Organization

BenchRisk is operated by the AI Verification and Evaluation Research Institute (AVERI). For all correspondence, please contact code@averi.org.